How Database Replication Works Under the Hood

Replicating data is a process you will encounter if you build a product. At the very least, you’ll want some kind of backup for your primary database, either through taking a snapshot or having a secondary “follower” database mirroring your primary data. You might also replicate data into a data warehouse for analysis, copy data to read-only replicas for load balancing, or replicate data while performing upgrades to your infrastructure.

That’s to say, database replication is a ubiquitous and helpful tool in database administration. So how does it work? How do you get data from one database to another?

It’s not quite as simple as just copying the data. If we were to do that, replication would become critically slow as the total amount of data increases. Instead, replication takes advantage of the built-in system for guaranteeing the atomicity and durability of your database commits–the write-ahead log (WAL).

Here, we want to show you how the WAL works in databases and how it is used for replication.

The WAL is the Database

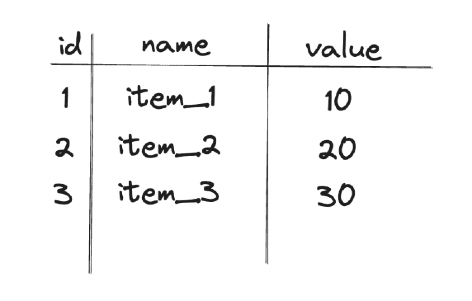

In relational databases, we model data as tables like this:

Tables have rows and columns, where each row represents a record, and each column represents a field within that record. This data-oriented view of databases makes sense for users, as we mostly care about the data in the database and how different fields relate.

But that isn’t the only way to think about a database. If you are trying to build a database, it makes more sense to see it from an operational perspective, where the database is a time-ordered sequence of events or changes. In that scenario, you’d just have a log of every transaction or modification recorded sequentially.

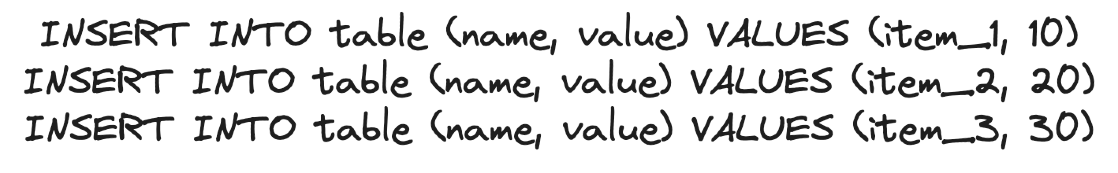

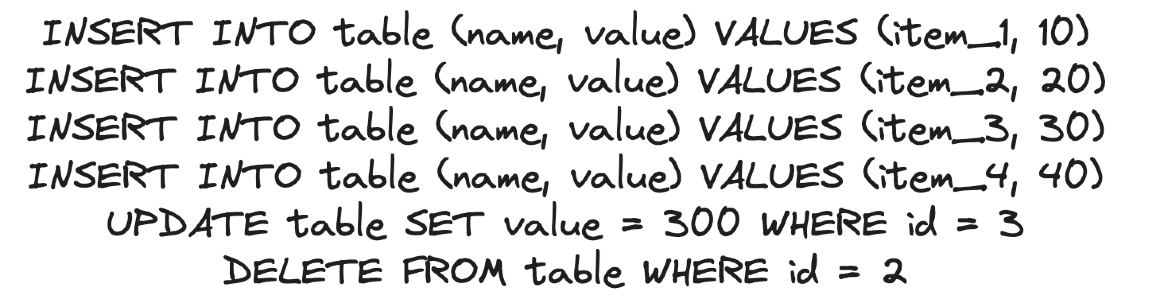

The table above becomes:

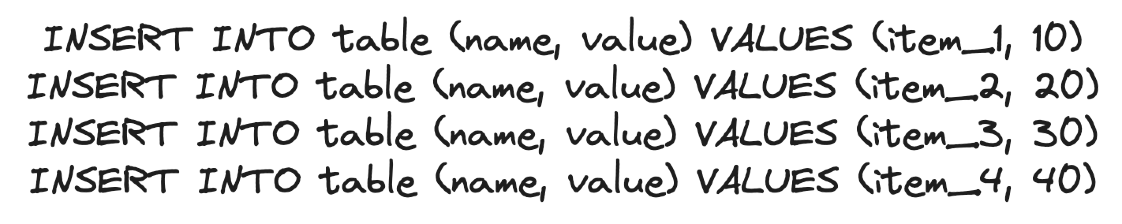

If we want to add another value, it is just appended to the log:

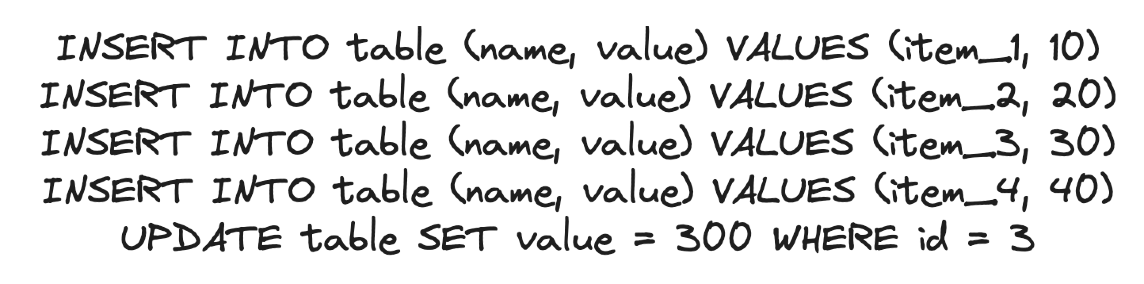

The same goes for any other operations. If we update an entry, the UPDATE is added to the log, but we can also still see the initial value from the INSERT:

Same with DELETE:

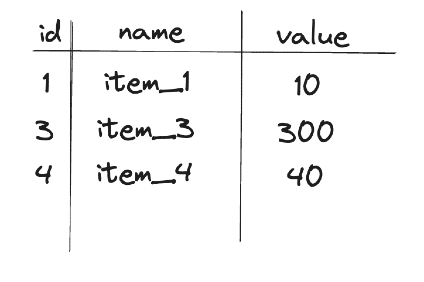

In the end, our table looks like this:

The data only represent the current state of the database. We don’t know how it got there. The logs provide a historical account of changes.

This chronological ledger is pivotal for both recovery and replication purposes. Write-ahead logging is a fundamental principle in database systems. Before any change is committed to the database files on disk, it is first logged in the WAL. This approach serves two primary purposes:

- Atomicity: In the event of a system crash or failure, the WAL is used to replay transactions, ensuring that all completed transactions are preserved, and all incomplete transactions are rolled back, thus maintaining the database’s consistency.

- Durability: The WAL ensures that it is not lost once a transaction is committed. By writing changes to a log before applying them to the database, the WAL provides a fail-safe against data loss due to unexpected failures.

In essence, the WAL acts as the backbone of the database's state. The log is the first point of contact for any change, making it the most up-to-date record of the database's evolution.

When it comes to database replication, logs are the source of truth. They provide a comprehensive and sequential record of all changes, making them ideal for replicating data across multiple systems.

- Replication Process: The primary database's log is continuously streamed to followers in log-based replication. Each change recorded in the log is replicated in the secondary systems. This ensures the replicas are an exact copy of the primary database, mirroring every change.

- Real-time Replication: With logs being the first to record any change, they enable near real-time replication. This is critical for applications requiring high data availability and up-to-date information across all nodes.

- Consistency and Reliability: Using logs for replication ensures that the data remains consistent across all replicas. Since the log records every transaction in the order they occur, the replication process respects this order, maintaining transactional integrity.

The log is more than a mere database recording mechanism; it is the authoritative ledger of all transactions and changes. Its role in replication is indispensable, particularly in systems like Readyset, where maintaining sub-millisecond latencies for query caching is critical. By leveraging logs' detailed, sequential nature, such systems ensure real-time, consistent, and reliable replication.

Logical Replication

There are three main types of replication:

- Physical Replication: Involves byte-by-byte copying of the database, replicating the exact physical state of the primary database. This method is commonly used in streaming replication.

- Snapshot Replication: Captures the state of a database at a particular point in time, akin to taking a "snapshot." This is less dynamic and is often used for less frequently changing data.

- Logical Replication: Focuses on replicating changes at a higher level–the level of database transactions. It allows for copying specific tables or rows and columns within tables, providing much more flexibility than physical replication.

Logical replication in database systems leverages the detailed information stored in database logs. In logical replication, instead of copying raw data, a replication agent reads the transaction logs and interprets the changes recorded in the logs. This interpretation converts the low-level format of the log (which is often storage and database-specific) into a high-level representation of the changes (like SQL statements or a similar format).

After interpretation, the changes are queued. This queuing mechanism ensures that they are delivered to the target database in the correct order, maintaining transactional integrity and consistency. The final step involves applying these changes to the target database by executing the high-level change records (like SQL statements) on the target database.

All this is achieved through a publish/subscribe model.

- Publication: In this model, the primary database defines a "publication," essentially a set of database changes– inserts, updates, deletes –that it is willing to publish.

- Subscription: The secondary systems, or subscribers, subscribe to these publications. They receive only the changes that are part of the subscribed publications, allowing for selective replication.

The publish/subscribe model in logical replication enables efficient distribution of data changes, as each subscriber can choose what data it needs. Subscribers can then receive updates in near real-time, making this method suitable for systems that require up-to-date information across multiple nodes.

This is easy to set up. Let’s do so using Postgres. On two separate database clusters, set up two independent databases, one called “primary_db”:

CREATE DATABASE primary_dbThe other is called “follower_db”:

CREATE DATABASE follower_dbIn both, create a table:

CREATE TABLE replicated_table (

id SERIAL PRIMARY KEY,

name VARCHAR(50),

value INT

);

In primary_db, populate this table with some data:

INSERT INTO replicated_table (name, value) VALUES ('Item1', 10);

INSERT INTO replicated_table (name, value) VALUES ('Item2', 20);

INSERT INTO replicated_table (name, value) VALUES ('Item3', 30);

INSERT INTO replicated_table (name, value) VALUES ('Item4', 40);

INSERT INTO replicated_table (name, value) VALUES ('Item5', 50);If you check the table in primary_db, you should see the data:

SELECT * FROM replicated_table;

id | name | value

----+-------+-------

1 | Item1 | 10

2 | Item2 | 20

3 | Item3 | 30

4 | Item4 | 40

5 | Item5 | 50

(5 rows)If you do the same in follower_db, it should still be empty:

SELECT * FROM replicated_table;

id | name | value

----+-------+-------

(0 rows)With the tables created, we can create the publication. On primary_db, create a publication for this table using the CREATE PUBLICATION command:

CREATE PUBLICATION my_publication FOR TABLE replicated_table;You can then check to see if that was successfully created by checking in the pg_publication_tables table:

SELECT * FROM pg_publication_tables WHERE pubname = 'my_publication';

pubname | schemaname | tablename

----------------+------------+-----------------

my_publication | public | replicated_table

(1 row)

Hop over to your follower_db and create a subscription to that publication using connection information from your primary database using CREATE SUBSCRIPTION:

CREATE SUBSCRIPTION my_subscriber

CONNECTION 'dbname=primary_db host=localhost port=5432'

PUBLICATION my_publication(note: if you want to try this on a local version of postgres, create your publication manually using “SELECT pg_create_logical_replication_slot(my_'subscriber', 'pgoutput');” on publishing_database and then add “WITH (create_slot = false)” to your CREATE SUBSCRIPTION command above on subscribing_database. Otherwise, CREATE_SUBSCRIPTION will hang.)

Now, if we check our table in follower_db again, we’ll see the data:

SELECT * FROM replicated_table;

id | name | value

----+-------+-------

1 | Item1 | 10

2 | Item2 | 20

3 | Item3 | 30

4 | Item4 | 40

5 | Item5 | 50

(5 rows)If we add a row to the table in primary_db:

INSERT INTO replicated_table (name, value) VALUES ('Item6', 60);We’ll immediately see the data in follower_db:

SELECT * FROM replicated_table;

id | name | value

----+-------+-------

1 | Item1 | 10

2 | Item2 | 20

3 | Item3 | 30

4 | Item4 | 40

5 | Item5 | 50

6 | Item6 | 60

(6 rows)

Our data is now being replicated. If we look into postgresql.log (this isn’t the write-ahead log, instead it is a log of what is happening with our postgres application), we can see what has happened:

STATEMENT: CREATE_REPLICATION_SLOT "pg_17529_sync_17519_7307265844192364778" LOGICAL pgoutput (SNAPSHOT 'use')

LOG: starting logical decoding for slot "pg_17529_sync_17519_7307265844192364778"

DETAIL: Streaming transactions committing after 0/A90190B0, reading WAL from 0/A9019078.The STATEMENT shows that we’ve created a new logical replication slot named pg_17529_sync_17519_7307265844192364778. The replication slot is created with the pgoutput plugin, the built-in logical decoding output plugin provided by PostgreSQL, used for logical replication. The “SNAPSHOT 'use'” part indicates that this slot will use the snapshot associated with the transaction running CREATE_REPLICATION_SLOT. So, this has captured the state of the database at a particular point in time.

The LOG and DETAIL indicate that logical decoding has started for the newly created replication slot. The message “Streaming transactions committing after 0/A90190B0, reading WAL from 0/A9019078” specifies the WAL location from which the replication is starting.

This means it will start streaming changes committed after the WAL location 0/A90190B0, and it's currently reading from the WAL location 0/A9019078. In WAL format of older versions of Postgres (<=9.2), the 0 is the segment of the WAL log, and A90190B0/A9019078 are the offsets within that segment. In newer versions, you get a single 64-bit integer pointer instead of two 32-bit integers

How Readyset Uses Logical Replication

In most caching mechanisms, replication isn’t used. Instead, developers must write their caching logic in the application layer to transfer data from a database to a cache.

Readyset works differently by taking advantage of the mechanism described above. Readyset registers itself as a consumer of a database’s replication stream, receiving the same information as a read replica–the log of changes made to the primary upstream database.

When you initially register a database with Readyset, we take a snapshot of that database. Readyset applies every write from the replication log to this copy of the upstream database. Cache misses don't go to the upstream database. Instead, the result is computed using the dataflow graph, and the result is cached. If any updated data in the replication stream is part of the dataflow graph for cached results, Readyset will update the cache using this new data.

In the context of Readyset, logical replication is not just about copying data; it's about maintaining high efficiency and performance in query caching:

- Sub-millisecond Latencies: Using logical replication, Readyset can ensure that the cache is updated almost instantly with changes from the primary database, maintaining sub-millisecond latencies.

- Consistency with Primary Data: The real-time nature of logical replication means that the cache is always consistent with the primary database, ensuring that the users get up-to-date and accurate data.

Logical replication with Readyset provides the flexibility, speed, and accuracy required for web-scale products. If you want to start with Readyset, sign up today!